Big Data, as the term suggests, is data that is too large to process using traditional methods. It originated with companies that needed to query very large, distributed, structured or semi-structured data. Google developed MapReduce to support distributed computing on large data sets across computer clusters. As discussed in the earlier post, a few examples of Big Data are:

- Petabytes of data

- Billions of records

- Distributed data

- Flat files (not held in a relational database)

- Semi-structured data such as log files

- Video messages

Applications that generate Big Data can be:

- Transactional/operational (CRM, ERP, Sales, HR)

- Analytics (IT logs, call centre)

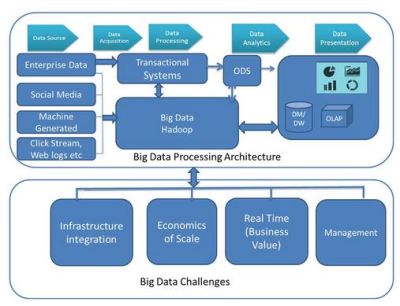

Big Data architecture is a set of components connected to one another, as shown in the image below. Hadoop sits in the middle tier of this structure, but it is not a mandatory requirement.

I will discuss the components further in upcoming tutorial posts.

Bottlenecks with Big Data are:

- Storage

- Transfer

- Sharing

- Analysis

- Processing

- Visualization

- Security

Big Data is not just about size:

- It finds insights in complex, noisy, heterogeneous, longitudinal, and voluminous data

- It aims to answer questions that previously went unanswered

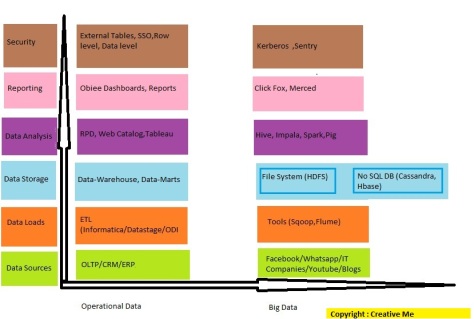

In the traditional approach, we use a data warehouse to store data (OLTP-OLAP) in a structured format, then process it, run data mining, and build reports for further high-level analysis. This approach works well for applications whose data volume fits on standard database servers, or stays within the limit of the processor handling the data.

But when it comes to huge, ever-growing volumes of data, processing it with this traditional approach becomes a problem. Transactional Big Data projects cannot rely on Hadoop, because it is batch-oriented rather than real-time.

For transactional systems that do not need database transactions with ACID properties (Atomicity, Consistency, Isolation, Durability), NoSQL databases can be used, though there are constraints, such as restricting transactions to a single data item.

Big Data transactional systems that need SQL databases with ACID properties have fewer options.

This is where distributed systems come into the picture for Big Data, most notably through MapReduce technology. For example, one machine with 4 I/O channels can process 1 terabyte of data in approximately 42 minutes if each channel runs at 100 MB/s.

But a distributed system of 100 machines, each with 4 I/O channels running at 100 MB/s, needs only about 25 seconds to process the same data.
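The arithmetic behind these two figures can be checked with a short sketch. It assumes decimal units (1 TB = 10^12 bytes, 1 MB = 10^6 bytes), a purely I/O-bound job, and perfect linear scaling across machines, which a real cluster will not quite reach. The function name `processing_time_seconds` is only illustrative.

```python
def processing_time_seconds(data_bytes, machines, channels_per_machine, channel_bytes_per_sec):
    """Time to read the data once, assuming every channel runs in parallel at full speed."""
    total_throughput = machines * channels_per_machine * channel_bytes_per_sec
    return data_bytes / total_throughput

TB = 10**12  # assumption: decimal terabyte
MB = 10**6   # assumption: decimal megabyte

single = processing_time_seconds(1 * TB, machines=1, channels_per_machine=4, channel_bytes_per_sec=100 * MB)
cluster = processing_time_seconds(1 * TB, machines=100, channels_per_machine=4, channel_bytes_per_sec=100 * MB)

print(f"1 machine:    {single / 60:.1f} minutes")   # 41.7 minutes
print(f"100 machines: {cluster:.0f} seconds")       # 25 seconds
```

In practice, coordination and network overhead mean the speed-up is less than the ideal 100x shown here.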

To take advantage of distributed systems, the MapReduce (MR) algorithm is used. It divides a task into small parts, assigns them to many computers (a cluster), and collects their results, which are then integrated to form the output data set.

Building on this approach, Doug Cutting and his team developed an open source project called Hadoop.

To go further and understand Hadoop, its components, and its architecture, please read my next blog post.
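As a rough illustration of the divide, assign, and collect steps, here is a minimal single-process sketch of a MapReduce-style word count. It is not Hadoop's API: the function names (`split_into_chunks`, `map_phase`, `shuffle`, `reduce_phase`) and the sample log lines are made up for this example, and the "workers" are simulated with an ordinary loop rather than real machines.

```python
from collections import defaultdict

def split_into_chunks(records, num_workers):
    """Divide the input into one chunk per (simulated) worker."""
    return [records[i::num_workers] for i in range(num_workers)]

def map_phase(chunk):
    """Map step: emit a (word, 1) pair for every word in the chunk."""
    pairs = []
    for line in chunk:
        for word in line.lower().split():
            pairs.append((word, 1))
    return pairs

def shuffle(mapped_outputs):
    """Group the values emitted by all workers under their key."""
    grouped = defaultdict(list)
    for pairs in mapped_outputs:
        for key, value in pairs:
            grouped[key].append(value)
    return grouped

def reduce_phase(grouped):
    """Reduce step: combine the values of each key into one result."""
    return {key: sum(values) for key, values in grouped.items()}

def word_count(records, num_workers=3):
    chunks = split_into_chunks(records, num_workers)
    # In a real cluster, each chunk would be mapped on a different machine.
    mapped = [map_phase(chunk) for chunk in chunks]
    return reduce_phase(shuffle(mapped))

logs = ["error disk full", "warning disk slow", "error network down", "error disk full"]
counts = word_count(logs)
print(counts["error"], counts["disk"])  # 3 3
```

The result does not depend on how the input is split, which is what allows the map step to be spread over many machines.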
